Adds, muls on my superscalar processor - part 2

The question

Since the findings in my previous post left me with more questions, I wanted to try to dig deeper into one of them in this post:

Why is Add3 faster than Add2?

These were the timing results:

| Benchmark | Time | CPU | Iterations |
|:----------|-----:|----:|-----------:|
| BM_Add | 56002369 ns | 55988747 ns | 13 |
| BM_Add2 | 174727175 ns | 174686940 ns | 4 |
| BM_Add3 | 137654951 ns | 137638465 ns | 5 |
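Turning the Time column (Google Benchmark's wall-clock time per benchmark iteration) into ratios makes the gap concrete:

$$
\frac{t_{\text{Add2}}}{t_{\text{Add3}}} = \frac{174\,727\,175\ \text{ns}}{137\,654\,951\ \text{ns}} \approx 1.27,
\qquad
\frac{t_{\text{Add3}}}{t_{\text{Add}}} = \frac{137\,654\,951\ \text{ns}}{56\,002\,369\ \text{ns}} \approx 2.46,
\qquad
\frac{t_{\text{Add2}}}{t_{\text{Add}}} = \frac{174\,727\,175\ \text{ns}}{56\,002\,369\ \text{ns}} \approx 3.12
$$

So Add3 takes roughly 21% less time than Add2, even though it accumulates into one more variable.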

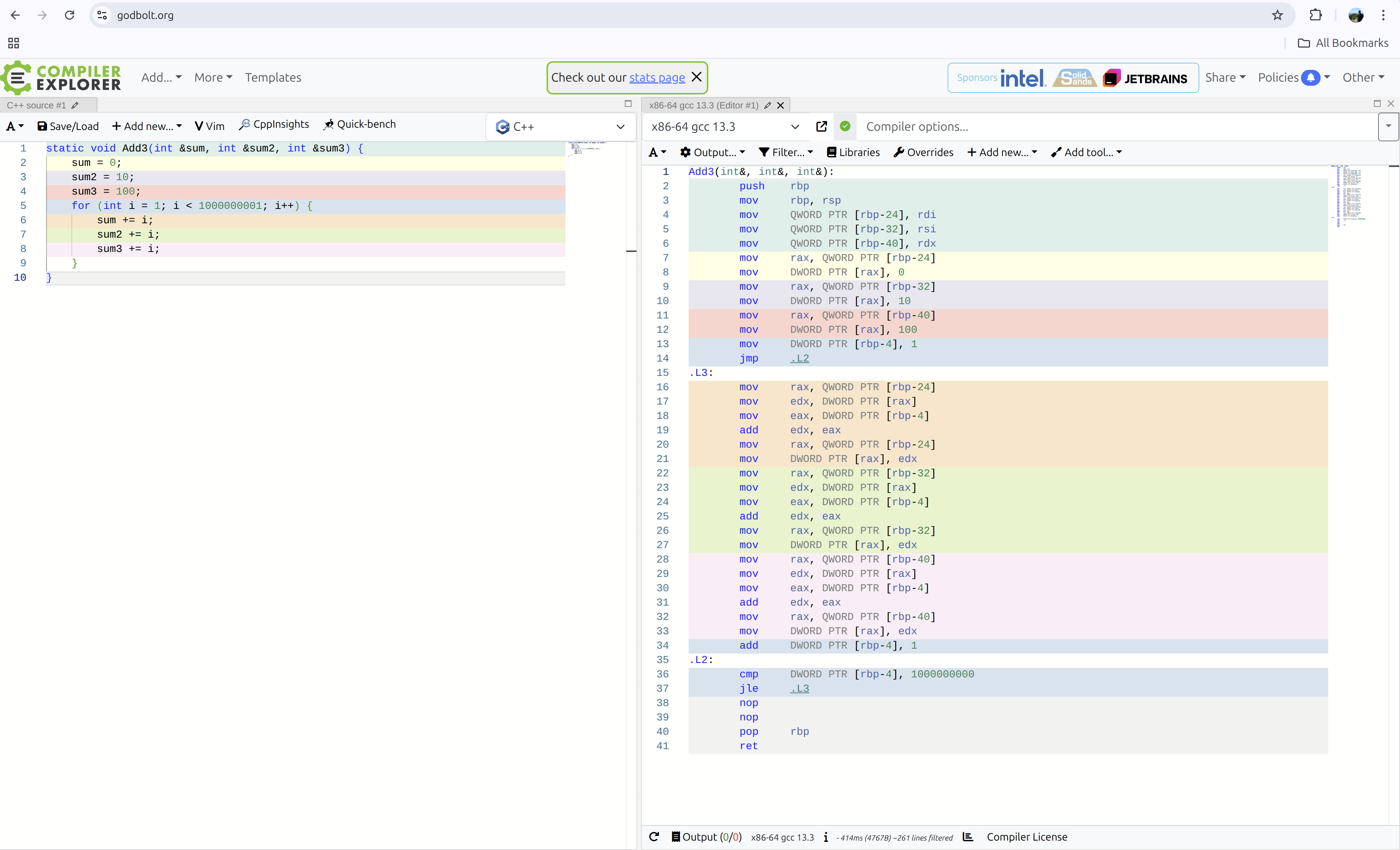

For Add3, Compiler Explorer shows the instructions for adding up 1 billion ints into 3 separate variables here:

There are 3 sections after the label .L3 that do the actual accumulations in the loop. Add2 is similar, with 2 such sections for its 2 variables.

Yet Add3 is faster than Add2, so I had to find out why.

Matching compiler flags

To avoid any discrepancies, one thing I should really do is replicate my benchmark project's compiler flags in Compiler Explorer. The project is a CMake project created through CLion, with Debug and Release build configurations. The Release configuration is what I use to get the benchmark timings.

To see my compiler flags, I've enabled verbose Makefile output in CMake:

set(CMAKE_VERBOSE_MAKEFILE ON)

I also have to make sure that Unix Makefiles are generated for builds (not Ninja or something else), because CMAKE_VERBOSE_MAKEFILE affects Makefile builds.

Then, when I build, I can see the full command line:

/usr/bin/c++ -DBENCHMARK_STATIC_DEFINE -I/home/nolan-veed/nolan-veed/benchmarks/cmake-build-release/_deps/benchmark-src/include -O3 -DNDEBUG -std=gnu++23 -fdiagnostics-color=always -MD -MT CMakeFiles/benchmarks.dir/test_superscalar.cpp.o -MF CMakeFiles/benchmarks.dir/test_superscalar.cpp.o.d -o CMakeFiles/benchmarks.dir/test_superscalar.cpp.o -c /home/nolan-veed/nolan-veed/benchmarks/test_superscalar.cpp

The flags most likely to change the generated machine code are the optimisation level, the NDEBUG preprocessor macro and the C++ standard selection:

-O3 -DNDEBUG -std=gnu++23
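You can double-check that a build really has these flags in effect by asking the compiler itself through predefined macros. The sketch below assumes GCC or Clang: __OPTIMIZE__, __STRICT_ANSI__ and __SSE2__ are compiler-specific macros. BuildInfo, build_info and print_build_info are hypothetical names used only for illustration.

```cpp
#include <cstdio>

// Snapshot of the flags discussed above, read from predefined macros.
// GCC/Clang-specific: other compilers may not define these macros.
struct BuildInfo {
    bool optimised;       // -O1 or higher defines __OPTIMIZE__
    bool ndebug;          // -DNDEBUG
    bool gnu_extensions;  // -std=gnu++NN leaves __STRICT_ANSI__ undefined
    bool sse2;            // SSE2 is available to the code generator
    long cplusplus;       // __cplusplus value chosen by -std
};

constexpr BuildInfo build_info() {
    BuildInfo info{};
#ifdef __OPTIMIZE__
    info.optimised = true;
#endif
#ifdef NDEBUG
    info.ndebug = true;
#endif
#ifndef __STRICT_ANSI__
    info.gnu_extensions = true;
#endif
#ifdef __SSE2__
    info.sse2 = true;
#endif
    info.cplusplus = __cplusplus;
    return info;
}

// Prints one line summarising the build, e.g. to compare the local
// Release build with a Compiler Explorer run using the same flags.
void print_build_info() {
    constexpr BuildInfo info = build_info();
    std::printf("optimised=%d NDEBUG=%d gnu-extensions=%d SSE2=%d __cplusplus=%ld\n",
                info.optimised, info.ndebug, info.gnu_extensions, info.sse2,
                info.cplusplus);
}
```

If both builds use the same flags, calling print_build_info() in each should produce the same line. The exact __cplusplus value for gnu++23 depends on the GCC version.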

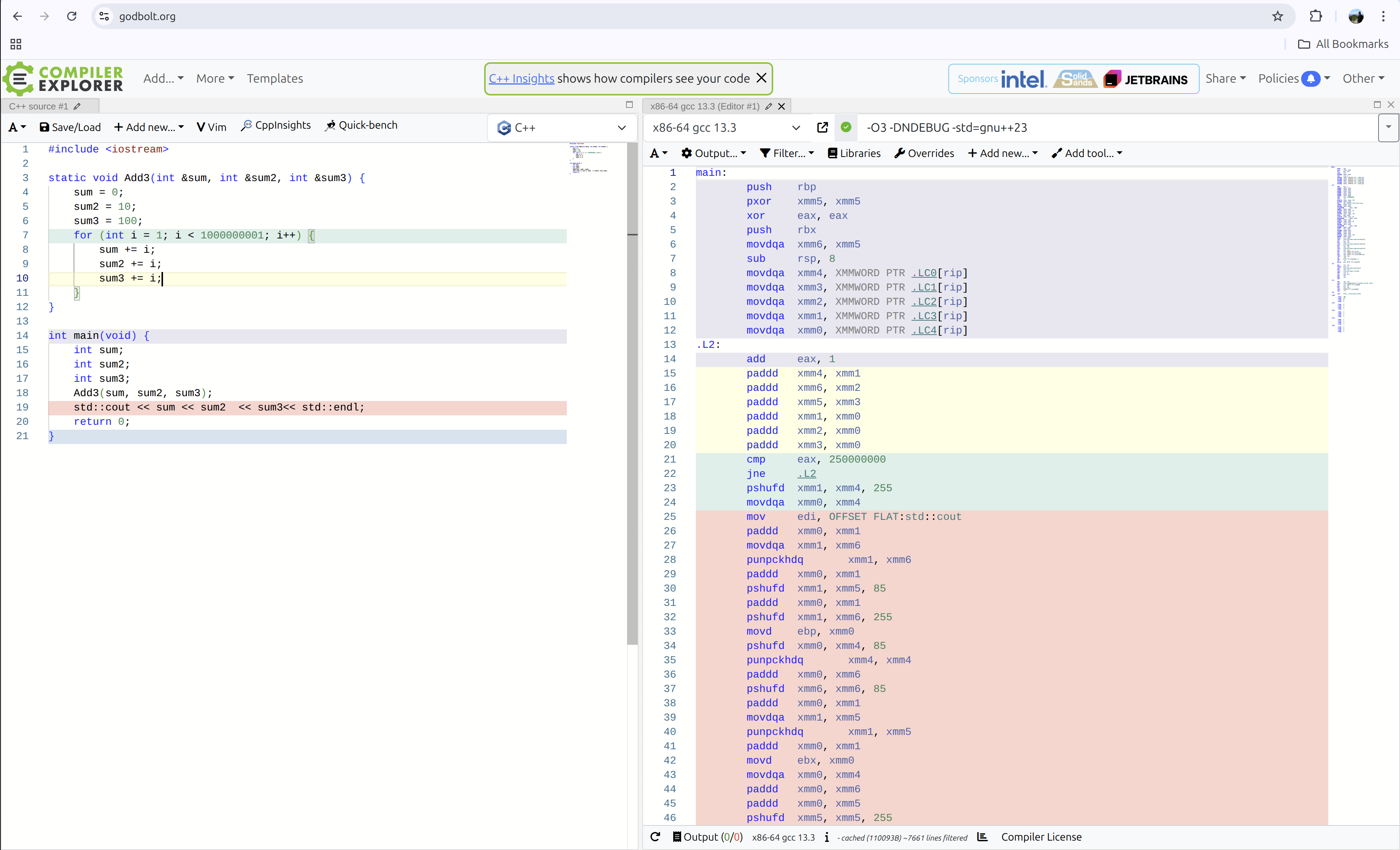

But when I put some of these flags into Compiler Explorer, I don't get any output. It looks like this is because the function is optimised out, as there are no callers to it. We need a way to use those variables, so I'll just print them like this:
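Here is a minimal sketch of the idea. It is hypothetical and not the benchmark's actual code. It assumes a single accumulator, like the sum += i in Add, and uses uint32_t so that wrap-around is well defined (overflowing a signed int is undefined behaviour). The names sum_up_to and print_sum are made up for illustration.

```cpp
#include <cstdint>
#include <cstdio>

// Hypothetical stand-in for a loop like Add's: sum the integers 0 .. n-1.
// uint32_t keeps 32-bit lanes (what paddd works on) and wraps mod 2^32.
std::uint32_t sum_up_to(std::uint32_t n) {
    std::uint32_t sum = 0;
    for (std::uint32_t i = 0; i < n; ++i) {
        sum += i;
    }
    return sum;
}

// Printing gives the result an observable effect, so at -O3 the compiler
// must still produce the value (it may compute it differently, but it
// can no longer discard it as dead code).
void print_sum(std::uint32_t n) {
    std::printf("sum = %u\n", static_cast<unsigned>(sum_up_to(n)));
}
```

Inside a Google Benchmark body, benchmark::DoNotOptimize on the result serves the same purpose without any printing.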

Aha! It's not as simple as it looked earlier. In "release" mode, the generated code uses vector instructions (more specifically, SSE2). For example, paddd is a packed addition of 32-bit integers. The xmm registers are 128 bits wide, so each one holds 4 ints, and a single paddd adds all 4 at once. That's why only 250 million iterations are done instead of 1 billion.
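To make the packed addition concrete, here is a hand-written SSE2 sketch of roughly what a vectorised single-accumulator loop (as in Add) does. It uses the intrinsic _mm_add_epi32, which maps to paddd. It assumes x86 with SSE2 and uint32_t sums, and sum_up_to_sse2 is a hypothetical name; it is an illustration, not the compiler's exact output. The 4 lanes of i start at {0, 1, 2, 3} and each iteration adds 4 to every lane, so n values take n / 4 iterations.

```cpp
#include <cstdint>
#include <emmintrin.h>  // SSE2 intrinsics; x86/x86-64 only

// Sums 0 .. n-1 four values at a time, mirroring the paddd loop.
std::uint32_t sum_up_to_sse2(std::uint32_t n) {
    __m128i idx = _mm_setr_epi32(0, 1, 2, 3);  // current 4 values of i
    const __m128i step = _mm_set1_epi32(4);    // advance every lane by 4
    __m128i acc = _mm_setzero_si128();         // 4 partial sums

    const std::uint32_t vec_iters = n / 4;     // 1e9 -> 250'000'000
    for (std::uint32_t k = 0; k < vec_iters; ++k) {
        acc = _mm_add_epi32(acc, idx);   // paddd: partial sums += i lanes
        idx = _mm_add_epi32(idx, step);  // paddd: i lanes += 4
    }

    // Combine the 4 partial sums through memory for clarity
    // (the compiler uses register shifts instead).
    alignas(16) std::uint32_t lanes[4];
    _mm_store_si128(reinterpret_cast<__m128i*>(lanes), acc);
    std::uint32_t sum = lanes[0] + lanes[1] + lanes[2] + lanes[3];

    // Scalar tail for the n % 4 leftover values.
    for (std::uint32_t i = vec_iters * 4; i < n; ++i) {
        sum += i;
    }
    return sum;
}
```

For n = 1,000,000,000, vec_iters is 250,000,000, the same constant that appears in cmp eax, 250000000.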

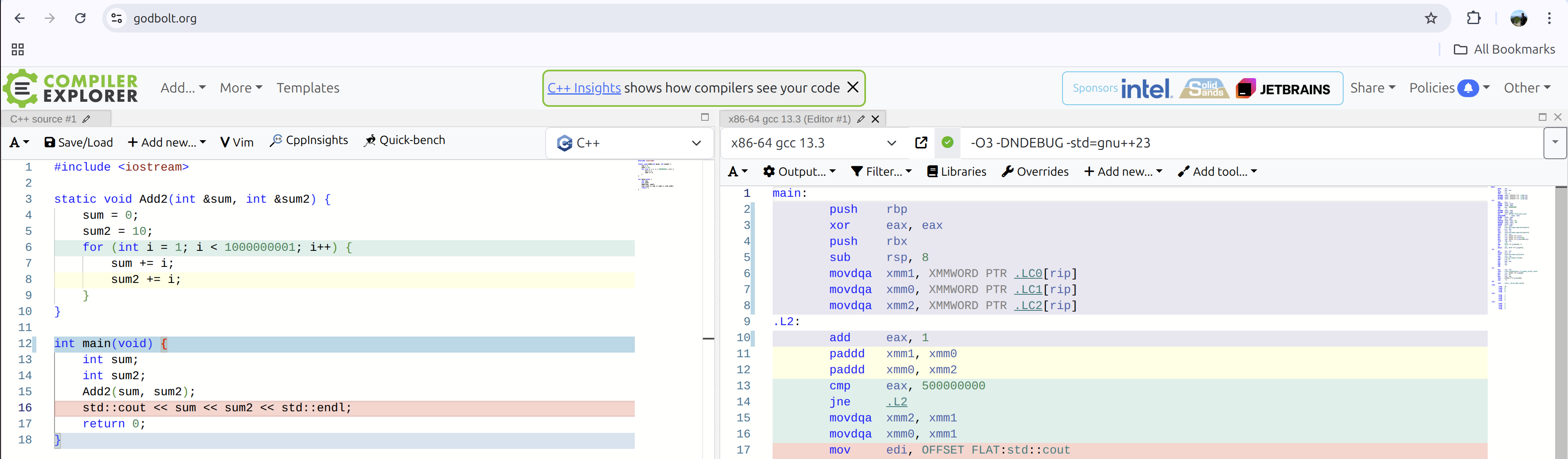

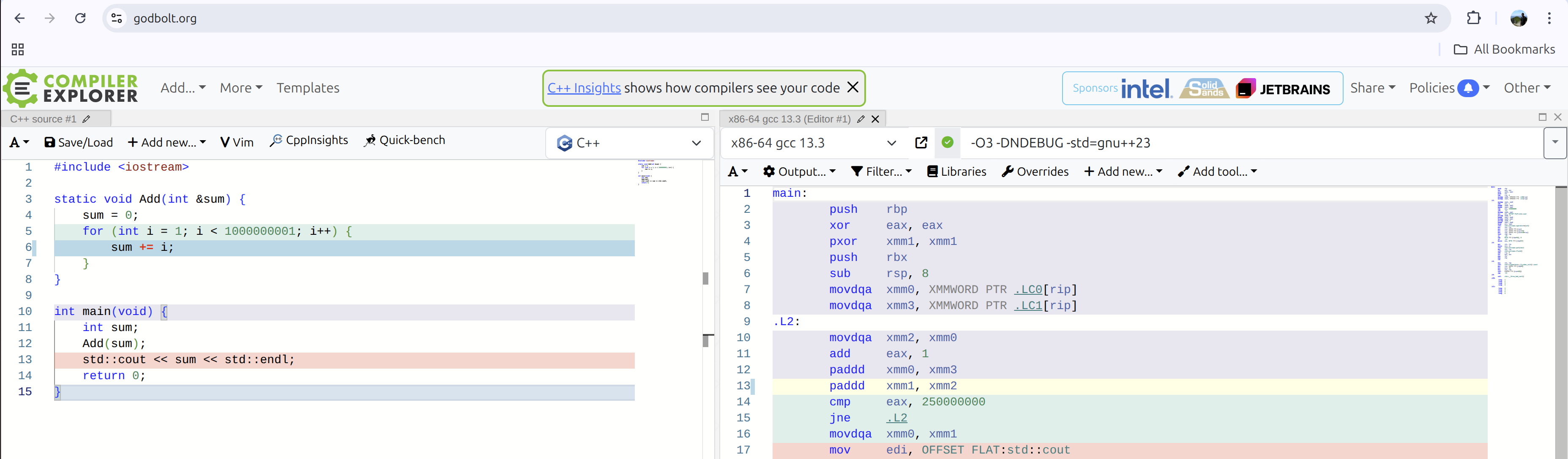

That was Add3. What about Add2 and Add?

Look closely at the cmp instruction!

Findings

Each loop iteration essentially runs from the label .Lxxx down to the jne .Lxxx instruction.

- The number of iterations in Add3 is lower than in Add2.
  - The cmp eax, 250000000 in Add3 iterates half as many times as the cmp eax, 500000000 in Add2.
  - Add3 is likely to be faster than Add2 because of this.
- The number of iterations in Add3 is the same as in Add.
  - Add3 is likely to be slower than Add because it does more additions.
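Dividing each benchmark's Time by its loop's iteration count gives a rough cost per loop iteration. This assumes each benchmark iteration runs the vectorised loop exactly once and that the loop dominates the measured time:

$$
\begin{aligned}
\text{Add:}\quad & \frac{56\,002\,369\ \text{ns}}{250\,000\,000} \approx 0.224\ \text{ns per loop iteration} \\
\text{Add2:}\quad & \frac{174\,727\,175\ \text{ns}}{500\,000\,000} \approx 0.349\ \text{ns per loop iteration} \\
\text{Add3:}\quad & \frac{137\,654\,951\ \text{ns}}{250\,000\,000} \approx 0.551\ \text{ns per loop iteration}
\end{aligned}
$$

A single Add3 iteration costs more than an Add2 iteration. However, Add2 runs twice as many, and 2 × 0.349 ns ≈ 0.699 ns is still more than Add3's 0.551 ns.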

The actual instructions emitted by the compiler are awesome. We'll need to refer to the manuals to understand them fully. Maybe another day.

Enabling assembly output locally

To be extra sure, I can look at the code generated on my machine, and then compare that with what Compiler Explorer shows me.

GCC can also save the assembly it generates, so I can inspect the instructions from my own build. These are the flags I need:

-save-temps -masm=intel -fverbose-asm -Wa,-adhlmn=main.lst

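-save-temps keeps the intermediate files (including the .s assembly file), -masm=intel switches to Intel syntax, -fverbose-asm adds comments about the variables involved, and -Wa,-adhlmn=main.lst asks the GNU assembler to write a listing to main.lst. Here are a few snippets from the 3 functions.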

For Add3:

# /home/nolan-veed/nolan-veed/benchmarks/test_superscalar.cpp:152: static void BM_Add3(benchmark::State &state) {

pxor xmm6, xmm6 # vect__18.418

movdqa xmm4, xmm10 # vect__18.418, vect__18.418

movdqa xmm2, xmm9 # vect_vec_iv_.416, vect_vec_iv_.416

xor eax, eax # ivtmp.421

movdqa xmm5, xmm6 # vect_sum3_47.417,

movdqa xmm1, xmm8 # vect_vec_iv_.415, vect_vec_iv_.415

movdqa xmm0, xmm7 # vect_vec_iv_.414, vect_vec_iv_.414

.p2align 4,,10

.p2align 3

.L128:

add eax, 1 # ivtmp.421,

# /home/nolan-veed/nolan-veed/benchmarks/test_superscalar.cpp:27: sum3 += i;

paddd xmm4, xmm0 # vect__18.418, vect_vec_iv_.414

paddd xmm5, xmm1 # vect_sum3_47.417, vect_vec_iv_.415

paddd xmm6, xmm2 # vect__18.418, vect_vec_iv_.416

paddd xmm0, xmm3 # vect_vec_iv_.414, tmp157

paddd xmm1, xmm3 # vect_vec_iv_.415, tmp157

paddd xmm2, xmm3 # vect_vec_iv_.416, tmp157

cmp eax, 250000000 # ivtmp.421,

jne .L128 #,

For Add2:

# /home/nolan-veed/nolan-veed/benchmarks/test_superscalar.cpp:143: static void BM_Add2(benchmark::State &state) {

xor eax, eax # ivtmp.439

movdqa xmm1, xmm5 # vect_sum2_39.435, vect_sum2_39.435

movdqa xmm0, xmm4 # vect_vec_iv_.434, vect_vec_iv_.434

.p2align 4,,10

.p2align 3

.L143:

add eax, 1 # ivtmp.439,

# /home/nolan-veed/nolan-veed/benchmarks/test_superscalar.cpp:16: sum2 += i;

paddd xmm1, xmm0 # vect_sum2_39.435, vect_vec_iv_.434

paddd xmm0, xmm2 # vect_vec_iv_.434, tmp111

cmp eax, 500000000 # ivtmp.439,

jne .L143 #,

For Add:

# /home/nolan-veed/nolan-veed/benchmarks/test_superscalar.cpp:135: static void BM_Add(benchmark::State &state) {

xor eax, eax # ivtmp.457

pxor xmm1, xmm1 # vect_sum_31.453

movdqa xmm0, xmm4 # vect_vec_iv_.452, vect_vec_iv_.452

.p2align 4,,10

.p2align 3

.L158:

movdqa xmm2, xmm0 # vect_vec_iv_.452, vect_vec_iv_.452

add eax, 1 # ivtmp.457,

paddd xmm0, xmm3 # vect_vec_iv_.452, tmp104

# /home/nolan-veed/nolan-veed/benchmarks/test_superscalar.cpp:7: sum += i;

paddd xmm1, xmm2 # vect_sum_31.453, vect_vec_iv_.452

cmp eax, 250000000 # ivtmp.457,

jne .L158 #,

movdqa xmm0, xmm1 # tmp98, vect_sum_31.453

psrldq xmm0, 8 # tmp98,

paddd xmm1, xmm0 # _11, tmp98
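The instructions after the Add loop (movdqa, psrldq xmm0, 8, paddd) begin a horizontal reduction, which folds the 4 partial sums in xmm1 into a single int. The snippet stops after the first fold. The sketch below assumes the usual second fold by 4 bytes. It uses the SSE2 intrinsics _mm_srli_si128 (psrldq) and _mm_add_epi32 (paddd), and horizontal_sum is a hypothetical name.

```cpp
#include <cstdint>
#include <emmintrin.h>  // SSE2 intrinsics; x86/x86-64 only

// Folds the 4 x 32-bit lanes of v into one sum (mod 2^32).
std::uint32_t horizontal_sum(__m128i v) {
    // psrldq by 8 bytes moves lanes 2,3 down to lanes 0,1; paddd adds them:
    // [a, b, c, d] -> [a+c, b+d, c, d]
    v = _mm_add_epi32(v, _mm_srli_si128(v, 8));
    // Shift by 4 bytes and add again to fold lane 1 into lane 0:
    // lane 0 becomes a+b+c+d
    v = _mm_add_epi32(v, _mm_srli_si128(v, 4));
    // Extract lane 0 (a movd).
    return static_cast<std::uint32_t>(_mm_cvtsi128_si32(v));
}
```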

The loops, including their cmp iteration counts, match what Compiler Explorer shows.
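One crude way to compare the three loops is a rough model: multiply the number of instructions in each loop body by the iteration count in its cmp. The body counts run from the label down to and including jne, tallied by hand from the listings above. The sketch does this arithmetic at compile time. It ignores everything outside the loops and says nothing about how instructions overlap on a superscalar core. LoopShape and dynamic_instructions are hypothetical names.

```cpp
#include <cstdint>

// Rough model: dynamic instructions = loop-body instructions x iterations.
struct LoopShape {
    const char* name;
    std::uint64_t body_instructions;  // .Lxxx label through jne, counted by hand
    std::uint64_t iterations;         // the constant in cmp eax, N
};

constexpr std::uint64_t dynamic_instructions(const LoopShape& loop) {
    return loop.body_instructions * loop.iterations;
}

// Add:  movdqa, add, paddd x2, cmp, jne
constexpr LoopShape kAdd{"Add", 6, 250'000'000};
// Add2: add, paddd x2, cmp, jne
constexpr LoopShape kAdd2{"Add2", 5, 500'000'000};
// Add3: add, paddd x6, cmp, jne
constexpr LoopShape kAdd3{"Add3", 9, 250'000'000};

static_assert(dynamic_instructions(kAdd) == 1'500'000'000);
static_assert(dynamic_instructions(kAdd2) == 2'500'000'000);
static_assert(dynamic_instructions(kAdd3) == 2'250'000'000);
```

By this count, Add3 executes about 2.25 billion loop instructions against Add2's 2.5 billion, even though it issues more paddd instructions (1.5 billion vs 1 billion). The measured time gap (about 27%) is larger than this 11% instruction gap, so instruction count alone is only part of the story.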

Phew!