Adds, muls on my superscalar processor

Preamble

I'm always curious about how long things take on machines, and wanted to learn more. So, I wrote some benchmarks to run on my machine. It's a Dell XPS 15 9510 laptop with an 11th Gen Intel(R) Core(TM) i9-11900H @ 2.50GHz, so it's not super new, but I use it on a daily basis. I'm currently on Ubuntu 24.04.2 LTS.

For this, I needed a micro-benchmarking library, so I chose Google Benchmark. It was pretty easy to set up as a CMake project, and I was quickly able to focus on writing some C++ code.

Starting small

So, I know that superscalar processors can execute multiple instructions concurrently. And they achieve this through multiple pipelines and execution units. I wanted to focus on additions and multiplications of integers.

Maybe I can check how long it takes to add 1 billion integers: ~56ms. What if I have some more additions in there?

Maybe I can check how long it takes to multiply 1 billion integers: ~629ms. What if I have some more multiplications in there?

I then simply added more variables and wrote Add2, Add3..., Mul2, Mul3.... I also wrote AddMul that combines a few additions and multiplications.
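Here is a rough sketch of what kernels like these could look like. It is not the exact code behind the numbers in this post: the function bodies, the `(i | 1)` trick that keeps the multiplier odd so the product never collapses to zero, and the `TimeMs` helper are all assumptions made for illustration. It also uses `std::chrono` instead of Google Benchmark so that it compiles on its own.

```cpp
#include <chrono>
#include <cstdint>

// Hypothetical kernel: one accumulator, so one add per iteration
// (plus the loop's own increment of i).
std::uint64_t Add1(std::uint64_t n) {
    std::uint64_t a = 0;
    for (std::uint64_t i = 0; i < n; ++i) {
        a += i;
    }
    return a;
}

// Two accumulators that do not depend on each other.
std::uint64_t Add2(std::uint64_t n) {
    std::uint64_t a = 0, b = 0;
    for (std::uint64_t i = 0; i < n; ++i) {
        a += i;
        b += i;
    }
    return a + b;
}

// One multiply per iteration. (i | 1) is always odd and non-zero;
// unsigned overflow wraps, which is well defined in C++.
std::uint64_t Mul1(std::uint64_t n) {
    std::uint64_t a = 1;
    for (std::uint64_t i = 0; i < n; ++i) {
        a *= (i | 1);
    }
    return a;
}

// One multiply and two adds per iteration, all on separate accumulators.
std::uint64_t AddMul(std::uint64_t n) {
    std::uint64_t a = 1, b = 0, c = 0;
    for (std::uint64_t i = 0; i < n; ++i) {
        a *= (i | 1);
        b += i;
        c += i;
    }
    return a + b + c;
}

// Hypothetical timing helper: runs the kernel once and returns elapsed
// milliseconds. The result is written to `sink` so the call is not dead code.
template <typename F>
double TimeMs(F kernel, std::uint64_t n, std::uint64_t& sink) {
    const auto start = std::chrono::steady_clock::now();
    sink = kernel(n);
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count();
}
```

Calling `TimeMs(Add1, 1000000000, sink)` gives one wall-clock sample. An optimizing compiler is free to vectorize these loops or even replace them with a closed form, so the real benchmarks need something like Google Benchmark's `benchmark::DoNotOptimize` to keep the work in place.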

There's probably a nicer way of writing a single C++ templated function with template parameter packs for all this. But, I wanted to keep it simple at this point as I was exploring. I should also look at other operations so there's more to do here.
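As a sketch of that templated idea, one option is a `std::index_sequence` pack plus a C++17 fold expression, which expands to one update per accumulator inside the loop. The names `RunImpl`, `AddN` and `MulN` are made up for this example, and the `(i | 1)` operand for the multiply is an assumption to keep the product non-zero.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <utility>

// Hypothetical helper: keeps sizeof...(Is) independent accumulators and
// applies `op` to each of them once per iteration.
template <typename Op, std::size_t... Is>
std::uint64_t RunImpl(std::uint64_t n, std::uint64_t init, Op op,
                      std::index_sequence<Is...>) {
    std::array<std::uint64_t, sizeof...(Is)> acc;
    acc.fill(init);
    for (std::uint64_t i = 0; i < n; ++i) {
        // Fold over the comma operator (C++17): one statement per accumulator.
        ((acc[Is] = op(acc[Is], i)), ...);
    }
    // Combine the accumulators so none of the work is unused.
    std::uint64_t total = 0;
    for (std::uint64_t v : acc) {
        total += v;
    }
    return total;
}

// N independent additions per iteration.
template <std::size_t N>
std::uint64_t AddN(std::uint64_t n) {
    return RunImpl(n, 0,
                   [](std::uint64_t a, std::uint64_t i) { return a + i; },
                   std::make_index_sequence<N>{});
}

// N independent multiplications per iteration.
template <std::size_t N>
std::uint64_t MulN(std::uint64_t n) {
    return RunImpl(n, 1,
                   [](std::uint64_t a, std::uint64_t i) { return a * (i | 1); },
                   std::make_index_sequence<N>{});
}
```

With this, `AddN<3>` plays the role of Add3 and `MulN<2>` the role of Mul2. Whether the compiler generates the same instructions as the hand-written versions is something to check in the disassembly rather than assume.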

Interesting learnings

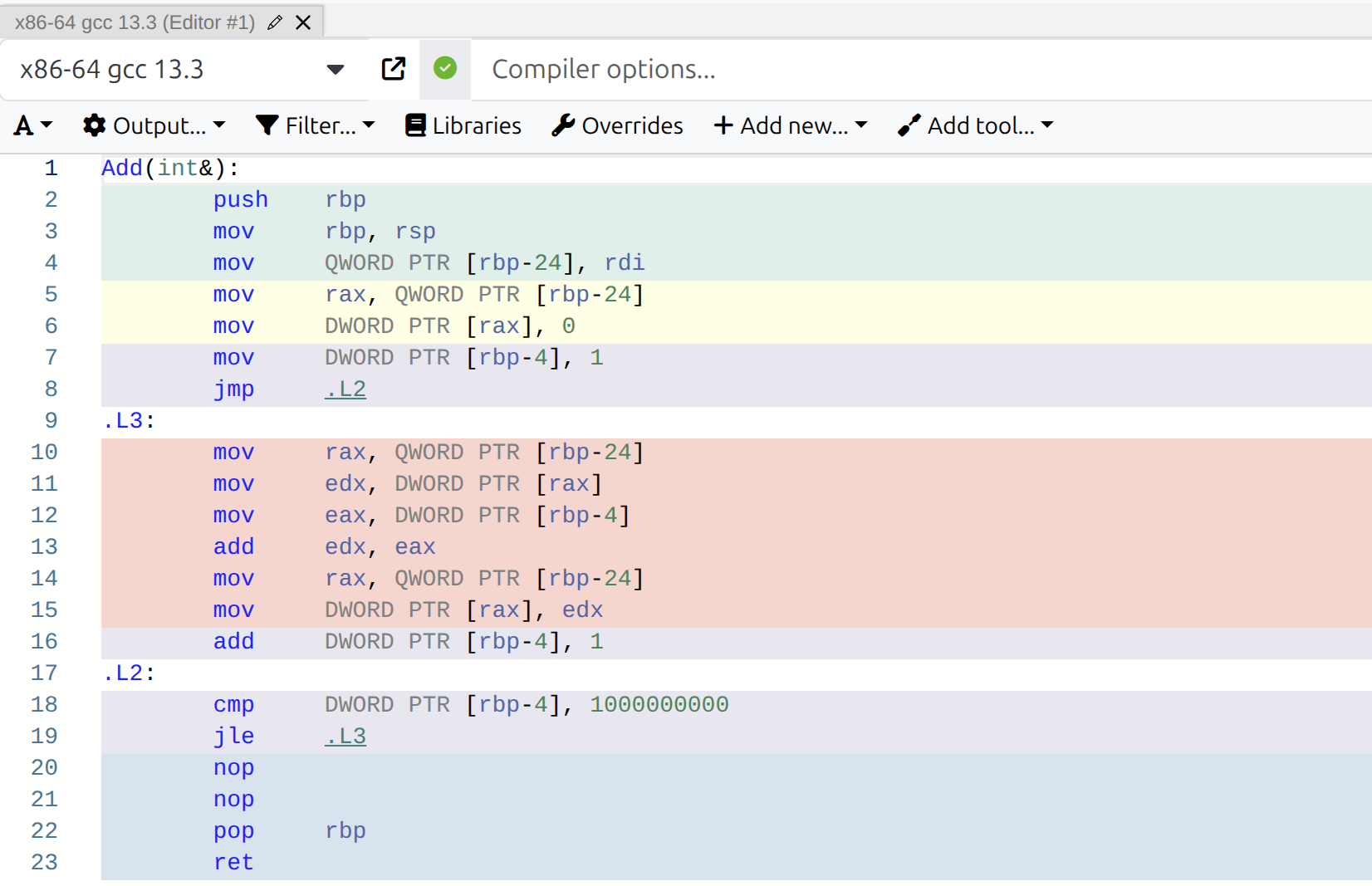

OK. So, all the benchmarks in this source file include an extra addition due to the incrementing i. This can be seen in the instructions here:

The timing results are here

- Every extra add increases the time.

- But, Add3 is faster than Add2.

  - Why? I'll have to look deeper.

- Multiplying takes way longer than adding.

  - ~10x possibly?

- Multiplying 2 or 3 variables takes the same time as multiplying 1.

  - There are possibly 3 multiplier units?

- Then multiplying more increases the time by 20ms.

  - Why 20ms increments?

- AddMul takes the same time as Mul3.

  - We can hide the cost of many additions behind multiplications.

  - This is like a performance optimisation by changing the code structure.
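To put rough numbers on the "~10x" and "20ms" observations, the totals can be converted to a per-iteration cost. This is only arithmetic on the figures quoted earlier (~56ms and ~629ms for 1 billion iterations). The helper names are made up, and it assumes every benchmark ran the same 1 billion iterations.

```cpp
// Iteration count quoted in the post (assumed to be the same for every benchmark).
constexpr double kIterations = 1e9;

// Convert a total run time in milliseconds to nanoseconds per loop iteration.
constexpr double NsPerIteration(double total_ms) {
    return total_ms * 1e6 / kIterations;
}

// How many times slower one measurement is than another.
constexpr double Ratio(double slow_ms, double fast_ms) {
    return slow_ms / fast_ms;
}
```

`Ratio(629.0, 56.0)` comes out at about 11.2, so "~10x" is in the right ballpark. `NsPerIteration(56.0)` is about 0.056 ns for the add loop, `NsPerIteration(629.0)` is about 0.629 ns for the multiply loop, and a 20ms step corresponds to about 0.02 ns per iteration.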

OK. A few learnings and more questions.

Hmm... Maybe this was a bit of a waste of time... but, I do like the incremental process of writing benchmarks. And I hope this does not turn into an unhealthy addiction. :D

Updates

- I found out why Add3 is faster than Add2. See this post.